The last few versions of Magento 2 brought some shiny new robots.txt features, and some interesting issues along with them. In this blog post, I’ll attempt to walk you through all of the different cases you can encounter while modifying the Magento 2 robots.txt file.

Episode I: The Status Code Menace

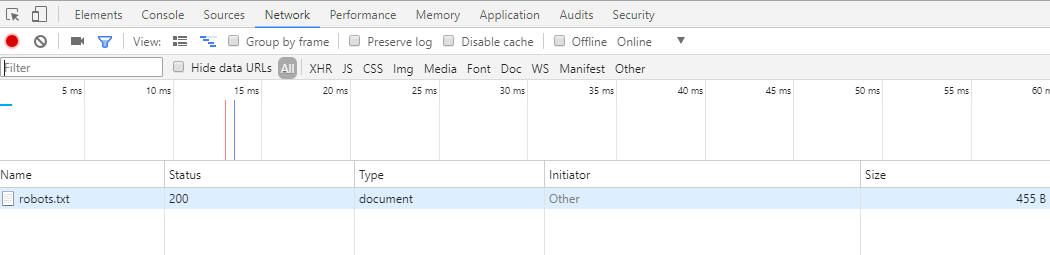

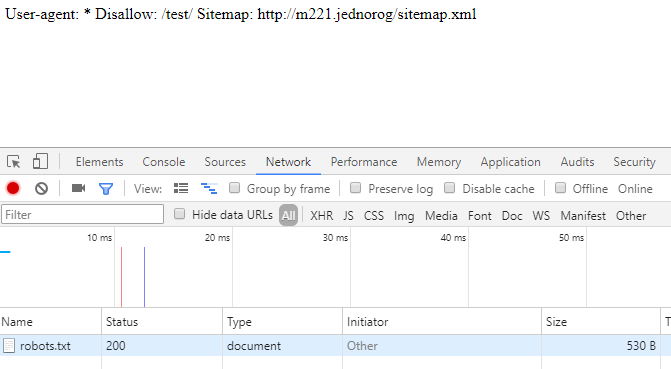

The first thing you’ll notice (if you’re a freak like me who actually checks the status code of most URLs you visit) is that on a clean Magento 2 install where you haven’t added a robots.txt file yourself, a request for robots.txt, from you or from any search engine bot, returns a status 200 page instead of a 404:

Notice it also weighs some bytes. This robots.txt file doesn’t physically exist in the root of your store. It’s generated dynamically because of some of the new configuration options in the Magento 2 admin that I’ll go through with you now.

Why is there a blank dynamically generated status 200 page instead of a 404? Because Magento 2. Can you somehow disable it from admin and get a 404 page instead? No.
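If you’d rather not eyeball the status code in your browser’s dev tools, here is a minimal Python sketch of the same check. The function names `fetch_robots` and `describe` are my own, and the store URL in the comment is just a placeholder; treat it as a rough illustration, not a monitoring tool.

```python
import urllib.error
import urllib.request


def fetch_robots(base_url, timeout=10):
    """Return (status_code, body_bytes) for <base_url>/robots.txt."""
    url = base_url.rstrip("/") + "/robots.txt"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status, response.read()
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx; the error still carries the code and body.
        return error.code, error.read()


def describe(status, body):
    """Summarise the response in one line."""
    if status == 200 and not body.strip():
        return "200 with an empty body (%d bytes)" % len(body)
    if status == 200:
        return "200 with %d bytes of content" % len(body)
    return "status %d" % status


# Example (placeholder store URL, replace with your own):
# print(describe(*fetch_robots("http://yourstore.com")))
```

On a store behaving as described above, you would expect the "200" branch rather than "status 404".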

Episode II: The Sitemap Injection

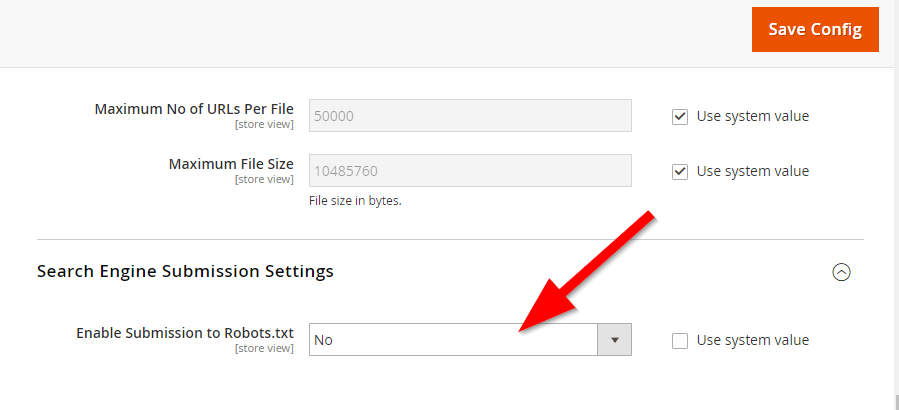

If you navigate to “Stores > Configuration > Catalog > XML Sitemap” and scroll down to “Search Engine Submission Settings”, you’ll find a setting that lets you add the Sitemap: directive to your robots.txt file:

If you enable this, the URL of your main sitemap index file (the one that contains just two URLs, those of your actual URL sitemap and your image sitemap) will be added to the dynamically generated robots.txt file. Visit yourstore.com/robots.txt and you’ll see something like this:

Sitemap: http://m221.jednorog/sitemap.xml

Episode III: The Content Design Configuration

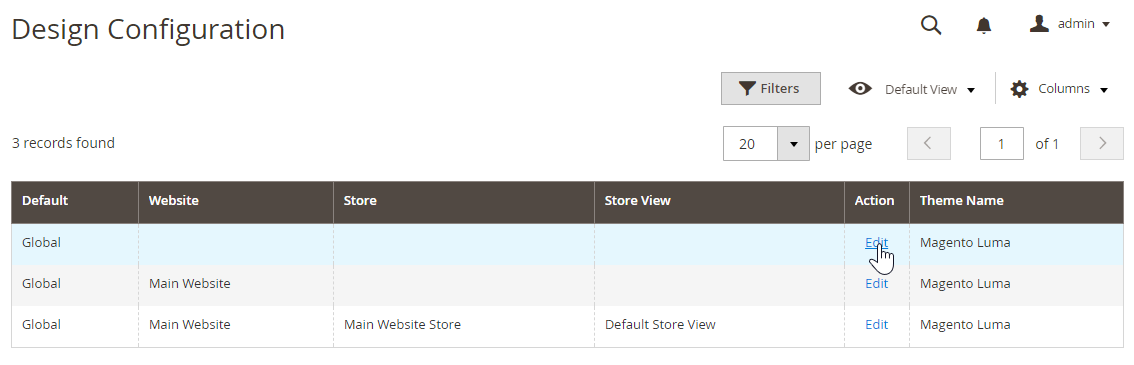

What does robots.txt have to do with design, one might ask? Nobody knows, but still, let’s navigate to “Content > Design > Configuration”.

In this very logical place, let’s edit the global or a website scope:

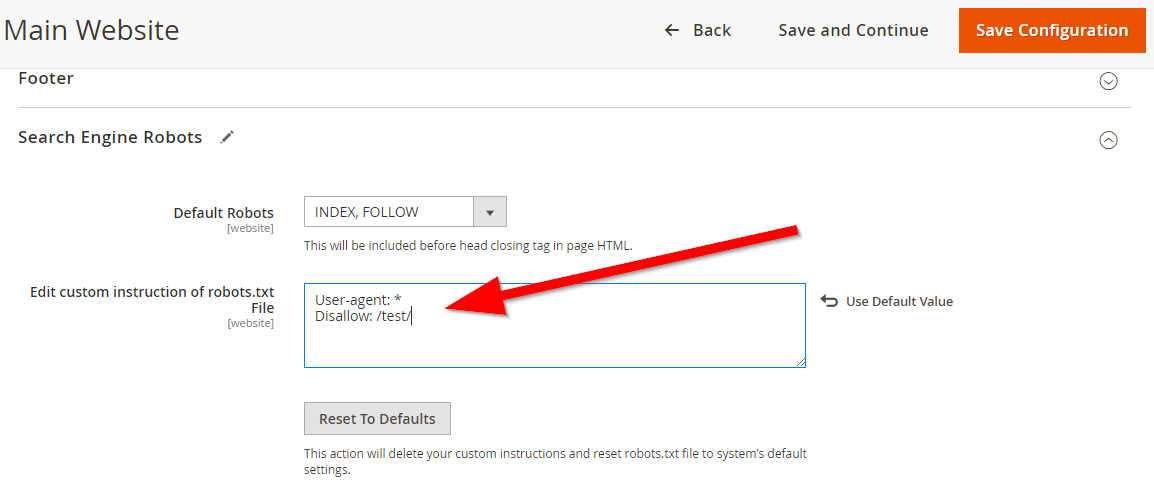

In here you can open an accordion section titled “Search Engine Robots”.

First thing you’ll notice is that these are blank. But why if your file already includes the sitemap directive we enabled in the previous section of this blog post? Because Magento 2.

Let’s add a few lines of code, like in the screenshot below, to the robots.txt file and see what happens, shall we?

PRO TIP: If you’re having trouble saving the configuration at this step locally, try this fix, it worked for me. Why? Because Magento 2.

So what do we get on the front-end in the dynamically generated robots.txt file, now that both the sitemap and a custom robots.txt text have been enabled? How will Magento 2 merge the two, and where will the sitemap directive appear? Well… this is what we get:

A misformatted robots.txt file that doesn’t break onto a new line where it should, with the sitemap directive appended at the end of it.
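To catch this kind of breakage without squinting at the output, you could scan the generated file for lines that carry more than one directive. The sketch below is my own rough check, not anything Magento ships; the directive list and the function name `lines_with_merged_directives` are assumptions, and the sample text is made up for illustration.

```python
import re

# Common robots.txt directives (an assumed, non-exhaustive list).
DIRECTIVES = ("user-agent", "disallow", "allow", "sitemap", "crawl-delay")
_PATTERN = re.compile(r"(?i)(" + "|".join(DIRECTIVES) + r")\s*:")


def lines_with_merged_directives(robots_txt):
    """Return 1-based numbers of lines holding more than one directive."""
    suspicious = []
    for number, line in enumerate(robots_txt.splitlines(), start=1):
        content = line.split("#", 1)[0]  # ignore trailing comments
        if len(_PATTERN.findall(content)) > 1:
            suspicious.append(number)
    return suspicious


# Made-up example of a missing line break before the Sitemap directive.
broken = "User-agent: *\nDisallow: /checkout/Sitemap: http://example.com/sitemap.xml"
print(lines_with_merged_directives(broken))
```

It is a heuristic: a path that happens to contain something like "allow:" would trigger a false positive, so read the flagged lines yourself before drawing conclusions.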

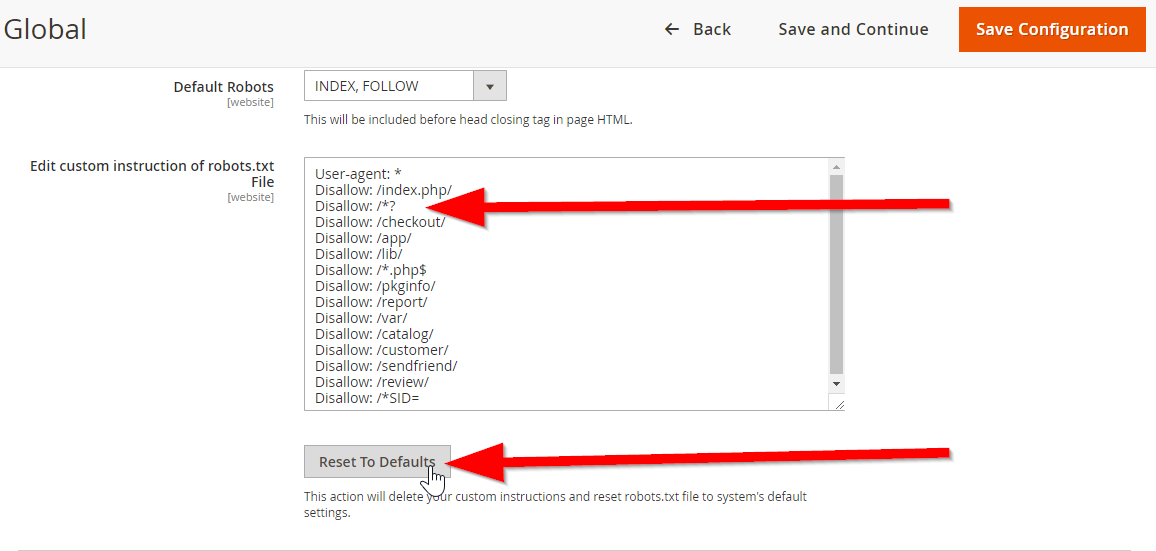

One would expect that clicking a reset to default button would return a blank field as that was the default state we found this in once Magento was installed, right? Wrong. What we get is this:

A badly written boilerplate for Magento 2 robots.txt file that I wouldn’t recommend using as it disallows everything with a parameter on the store.

Episode IV: A New Hope

What happens if we now add a custom robots.txt file that is actually physically present in the root of your store?

It completely overwrites everything we did in the previous steps. It disregards all the text you input in episode III as well as sitemap injection of episode II. And if you wrote it correctly, it works and is formatted correctly.
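Whether you “wrote it correctly” is something you can sanity-check before uploading the file. Here is a small sketch using Python’s standard `urllib.robotparser`; the helper name `is_allowed`, the sample rules, and the example.com URLs are placeholders of mine, not a recommended robots.txt for your store.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, url, agent="*"):
    """Check whether `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Placeholder rules, only for illustration.
sample = "User-agent: *\nDisallow: /checkout/\n"
print(is_allowed(sample, "http://example.com/checkout/cart"))
print(is_allowed(sample, "http://example.com/some-product.html"))
```

Keep in mind that the standard library parser does plain prefix matching and doesn’t understand wildcard patterns such as `*`, so rules that rely on them need to be tested with another tool.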

So to conclude…

At least for now, stick with adding robots.txt to your Magento 2 stores the old-fashioned way: add an actual text file to the root of your store.